Using the RTP Media APIs

Most of the time, and for my use cases, I still mainly use the WebRTC 1.0 APIs: the ones based on getUserMedia, RTCPeerConnection, MediaStream and MediaStreamTrack.

But for about 4 years now, there have been other standardized APIs, grouped in the RTP Media API chapter of the specification.

In this article, I used them for manipulations mainly around stopping or muting the video stream. I wanted to compare the different ways and their consequences on the remote end.

I would like to have a “mute” solution where the camera is turned off during the mute and that works smoothly without having to send an additional signaling message to the remote peer. So the remote peer should be able to react from the media side or from the negotiation side only.

Setup used

For that article, I used the following devices

Microphone: Rode NT-USB Microphone which works in stereo

Microphone: Konftel Ego that mixes a microphone and a loudspeaker

Camera: Razer Kiyo, which has autofocus

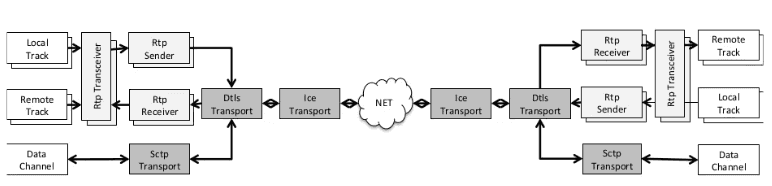

WebRTC + ORTC = WebORTC

RTCRtpTransceiver among other APIs comes from the ORTC API which was originally a counter-proposal to the SDP-based negotiation proposed in the JSEP protocol. These APIs were first implemented in the old Edge browser by Microsoft.

Then, the ORTC APIs were integrated into the WebRTC 1.0: Real-Time Communication Between Browsers specification.

The objectives were to:

Hide the SDP from being manipulated: ORTC considers the SDP a “private API” that should not be exposed or modified directly

Provide more APIs to directly access and control the RTP engine

The ORTC APIs cover the gap between the MediaStreamTrack and the RTCPeerConnection by giving more control and visibility on how things are done.

With these APIs, developers have new possibilities to act directly on the media (eg: add a track, mute it, change the size of the video, select the codec, …) as well as to access the underlying transport, based on RTCDtlsTransport and RTCIceTransport.

But complexity comes from the fact that both sets of APIs (WebRTC 1.0 + ORTC) are available to developers, who can mix and match them. So for newcomers (myself included), it can be difficult to understand what to use and under what circumstances.

Don’t touch the SDP anymore?

As mentioned, ORTC has defined a new set of APIs to globally avoid ‘munging’ the SDP.

“Munging” the SDP (or SDP mangling) is the action of modifying the contents of the SDP before sending it to the remote party and before using it locally.

SDP is used during the negotiation phase of the call: the flow of messages exchanged between two peers, or between a peer and a server, that lets both parties agree on the media to use.

Note: Negotiation is done using the signaling protocol between the two entities that must be “in place” when the negotiation begins.

The negotiation is necessary each time the contract (SDP) that binds the two parties changes. So there are renegotiations.

Before the “ORTC API” was implemented, some actions were performed by “munging” the SDP. For example, if you wanted to use VP9 instead of VP8 when initiating the call, you had no other option than to replace some strings in the SDP:

    // Video using VP8
    m=video 9 UDP/TLS/RTP/SAVPF 96 97 102 122 127 121 125 107 108 109 124 120 39 40 45 46 98 99
    …
    // Replaced by video using VP9
    m=video 9 UDP/TLS/RTP/SAVPF 98 99 96 97 102 122 127 121 125 107 108 109 124 120 39 40 45 46
    …

The problem here is that modifying the SDP is a source of errors.

That’s why, in the following manipulations, I never manually modified the SDP but rather used the alternative ORTC APIs.
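As an illustration of that approach, here is a minimal sketch of how the VP8-to-VP9 switch could be expressed without munging, using setCodecPreferences on a transceiver. The helper names preferCodec and applyVp9Preference are mine (hypothetical, not from the tests above), and the sketch assumes the call is made before the offer is created.

```javascript
// Pure helper (hypothetical name): move every codec matching `mimeType`
// to the front while keeping the relative order of the other entries.
function preferCodec(codecs, mimeType) {
  const wanted = mimeType.toLowerCase();
  const preferred = codecs.filter((c) => c.mimeType.toLowerCase() === wanted);
  const others = codecs.filter((c) => c.mimeType.toLowerCase() !== wanted);
  return [...preferred, ...others];
}

// Browser-only part: `transceiver` is assumed to be an existing video
// RTCRtpTransceiver. The new order is used by the next offer/answer.
function applyVp9Preference(transceiver) {
  const { codecs } = RTCRtpReceiver.getCapabilities('video');
  transceiver.setCodecPreferences(preferCodec(codecs, 'video/VP9'));
}
```

The reordering is kept in a pure function so it can be checked outside a browser; only applyVp9Preference touches the WebRTC objects.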

To rely on these APIs, I handled the negotiationneeded event so that it triggers the negotiation without any extra processing, as in this example. This way, every time a negotiation is needed, the application initiates it.

    let pc; // An existing peer connection

    const offerOptions = {
      offerToReceiveAudio: true,
      offerToReceiveVideo: true,
      iceRestart: true,
    };

    const onCreateOfferSuccess = () => {
      // Continue negotiation with setLocalDescription, setRemoteDescription...
    };

    const onCreateOfferFailure = () => {
      // Discard call in case of error
    };

    // Trigger a new negotiation each time it is needed
    pc.addEventListener('negotiationneeded', () => {
      pc.createOffer(offerOptions).then(onCreateOfferSuccess, onCreateOfferFailure);
    });

So, let’s see these manipulations!

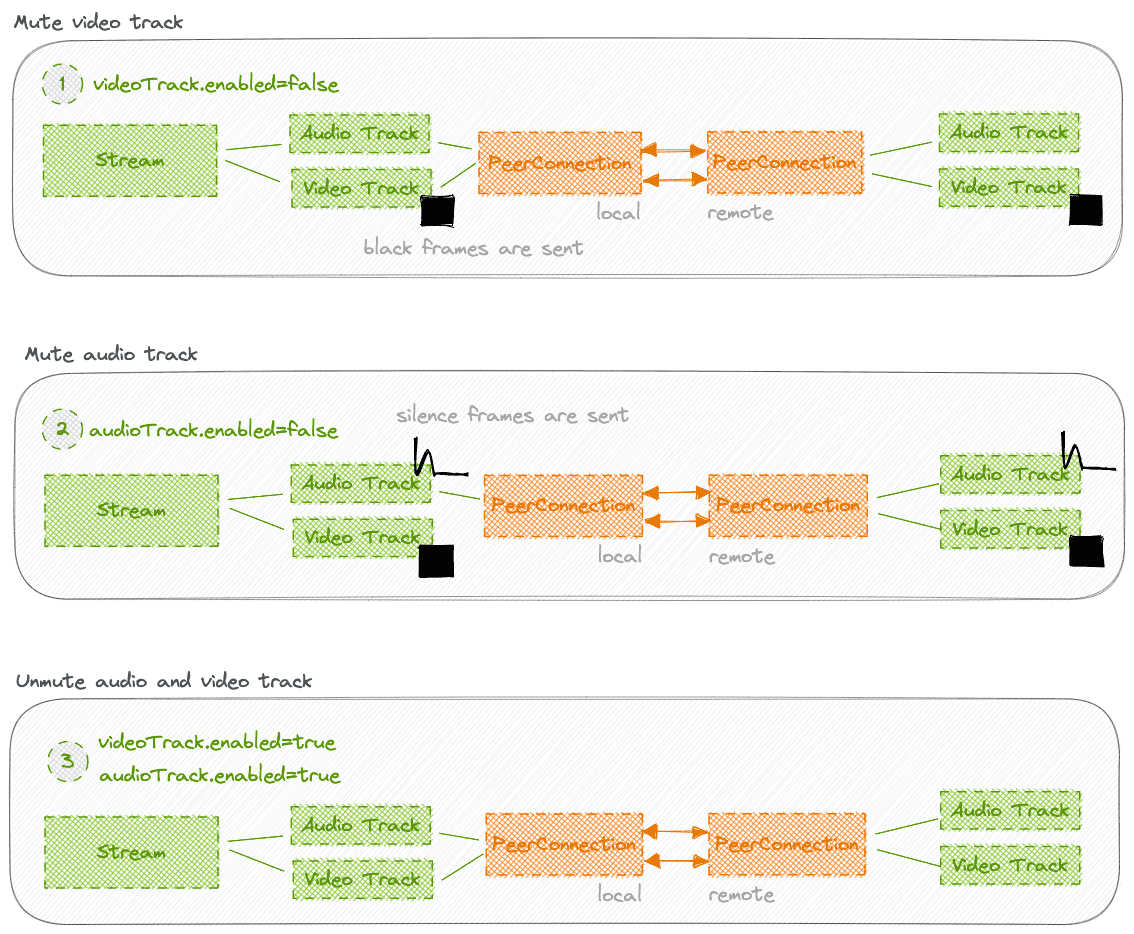

Mute/unmute at track level

In this first manipulation, I created an audio+video call. Then I muted and unmuted the video and the audio streams at the track level, using the enabled property.

This is the “old-way” of implementing a mute/unmute function.

To notice:

100% based on WebRTC APIs 1.0 (No ORTC API used)

The Media continues to be transmitted to the recipient using black frames (video) and silent frames (audio)

The remote peer is not aware of any changes: neither through the signaling channel nor through the media itself and therefore cannot react. The stats API must be used to detect this change or additional signaling messages should be sent.

The camera was not turned off during the mute (the light stayed on).

Track based muting and unmuting is easy to implement but is less and less used because external computations on the remote side are required to detect this pattern. In case of complaints, it is more complicated to debug.
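A minimal sketch of this track-level mute, assuming the local MediaStream is at hand. The function name setTracksEnabled is hypothetical; only the enabled property comes from the API.

```javascript
// Enable or disable every local track of the given kind ('audio' or 'video').
// A disabled track keeps flowing, but as silence or black frames.
function setTracksEnabled(stream, kind, enabled) {
  const tracks = stream.getTracks().filter((track) => track.kind === kind);
  for (const track of tracks) {
    track.enabled = enabled;
  }
  return tracks.length; // number of tracks affected
}
```

For example, setTracksEnabled(localStream, 'video', false) would mute the video and the same call with true would unmute it, without any negotiation.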

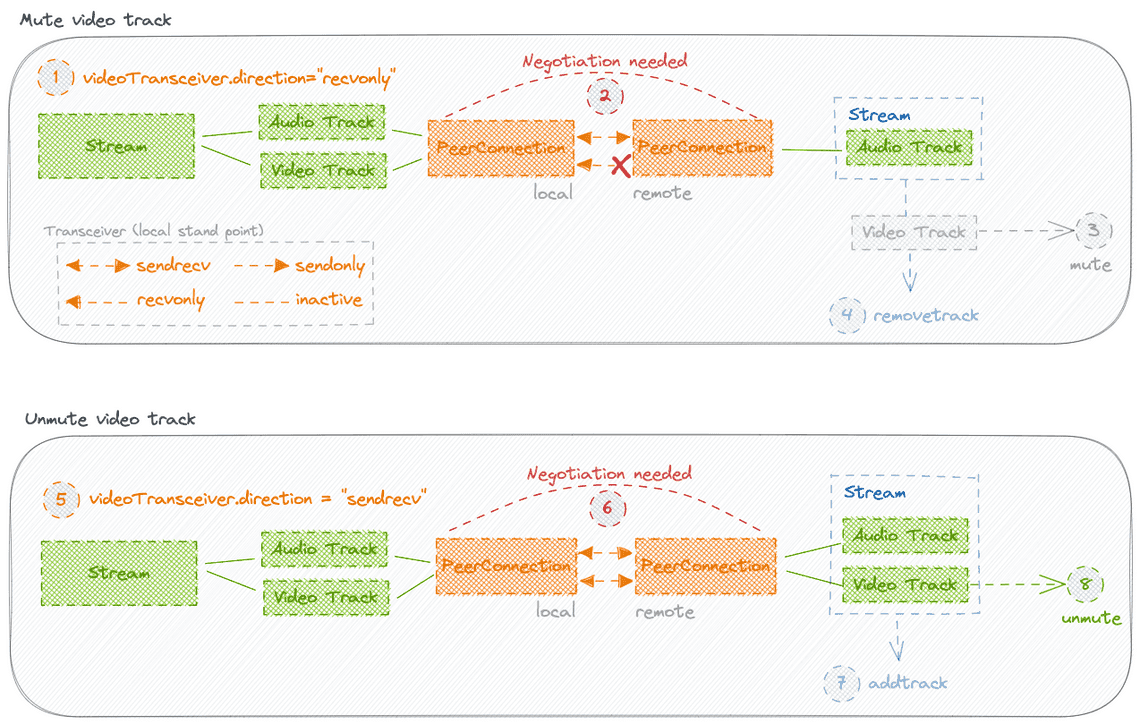

Mute/unmute at transceiver level

In this manipulation, I reused my audio and video tracks. Then I muted and unmuted the video stream at the transceiver level, which means using the direction property.

To notice:

80% based on ORTC APIs + 20% based on WebRTC APIs 1.0

The remote peer is aware of changes: either through the signaling channel (the negotiationneeded event is triggered when changing the direction) or through the media itself (removetrack and addtrack events are triggered at stream level, mute and unmute events are triggered at track level).

The camera was not turned off during the mute (the light stayed on).

No media is sent when muted (when direction is “recvonly”).

Using the transceiver for muting and unmuting is a good and easy solution. But for the initiator, the camera is not turned off because the video stream is still captured locally.
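A minimal sketch of both sides of this manipulation. The names setSending and watchRemoteTrack are hypothetical, and the sketch assumes a negotiationneeded handler is already in place on the sending side.

```javascript
// Sending side: stop or resume sending on a transceiver while still
// accepting remote media. Changing `direction` leads to `negotiationneeded`.
function setSending(transceiver, sending) {
  transceiver.direction = sending ? 'sendrecv' : 'recvonly';
  return transceiver.direction;
}

// Receiving side: report when the incoming track stops or resumes
// delivering media, so the UI can react without extra signaling.
function watchRemoteTrack(track, onChange) {
  track.addEventListener('mute', () => onChange('muted'));
  track.addEventListener('unmute', () => onChange('unmuted'));
}
```

On the remote side, the track would typically be the one received in the peer connection's track event.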

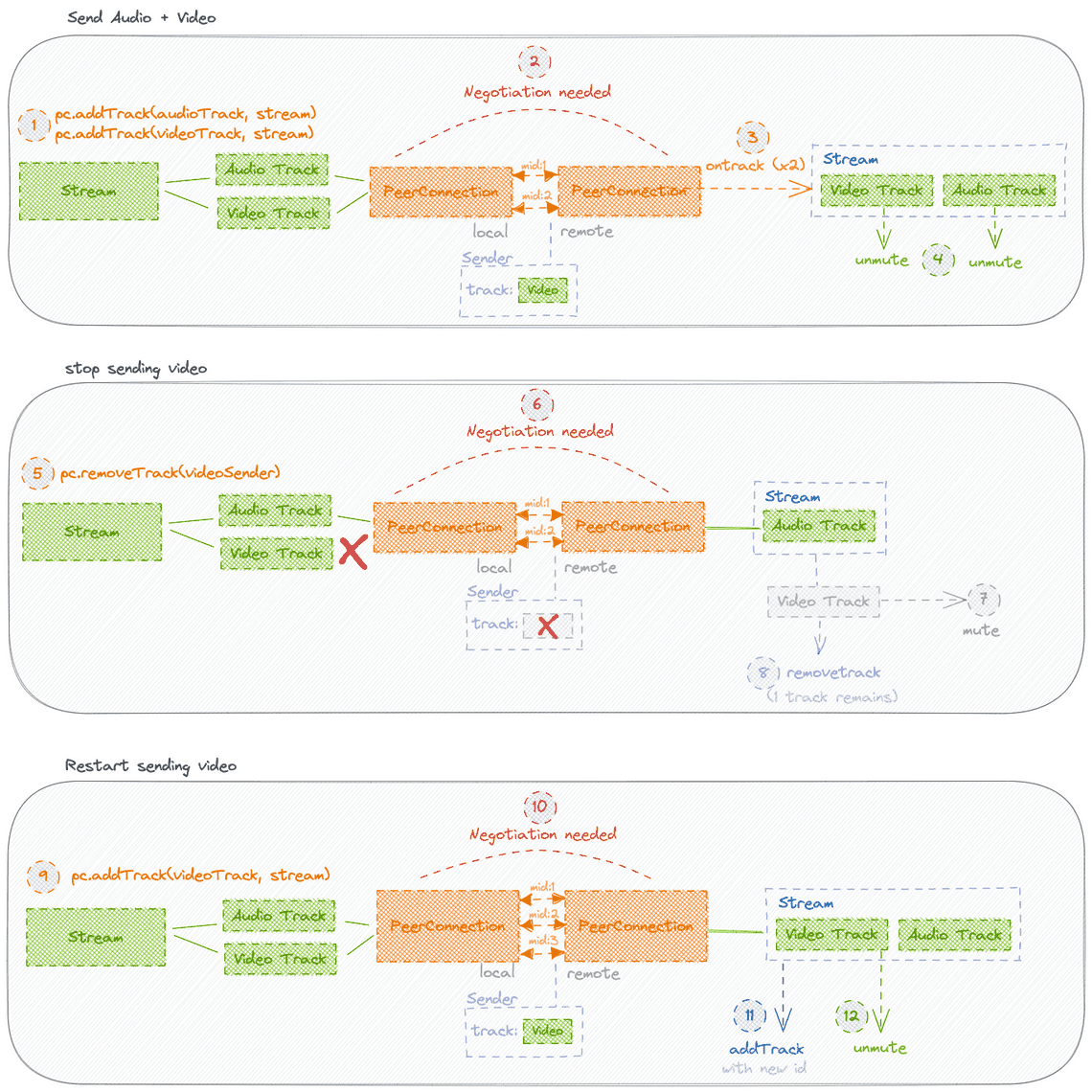

Add/remove track at peer connection level

Here, I started by adding an audio track and a video track. Then, I removed the video track temporarily.

To notice:

95% based on WebRTC APIs 1.0 + 5% based on ORTC APIs (due to the use of RTCRtpSender).

The RTCPeerConnection and MediaStreamTrack interfaces are not the only APIs needed here; the RTCRtpSender instance created must be used too: the removeTrack API takes a sender as a parameter, not a track…

The remote peer is aware of changes: either through the signaling channel (the negotiationneeded event is triggered when using the functions addTrack and removeTrack) or through the media itself (removetrack and addtrack events are triggered at stream level, mute and unmute events are triggered at track level).

The number of transceivers increases: a new m=video line is added on each call to addTrack. The existing RTCRtpSender is not reused. The SDP grows indefinitely.

The camera was not turned off during the manipulations (the light stayed on).

Adding and removing streams using only the RTCPeerConnection interface is not as good because the existing transceiver is not reused, so the SDP content grows.
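A minimal sketch of the removal step, showing the sender lookup that removeTrack requires, plus a small counter I could use to watch the number of transceivers. Both function names are hypothetical.

```javascript
// Remove the outgoing track of the given kind. removeTrack() expects the
// RTCRtpSender, not the MediaStreamTrack, so the sender is looked up first.
function removeTrackByKind(pc, kind) {
  const sender = pc.getSenders().find((s) => s.track && s.track.kind === kind);
  if (!sender) {
    return false; // nothing is currently sent for this kind
  }
  pc.removeTrack(sender);
  return true;
}

// Count the transceivers, to check whether the SDP keeps growing.
function countTransceivers(pc) {
  return pc.getTransceivers().length;
}
```

Logging countTransceivers(pc) before and after each add/remove cycle is one way to reproduce the growth described above.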

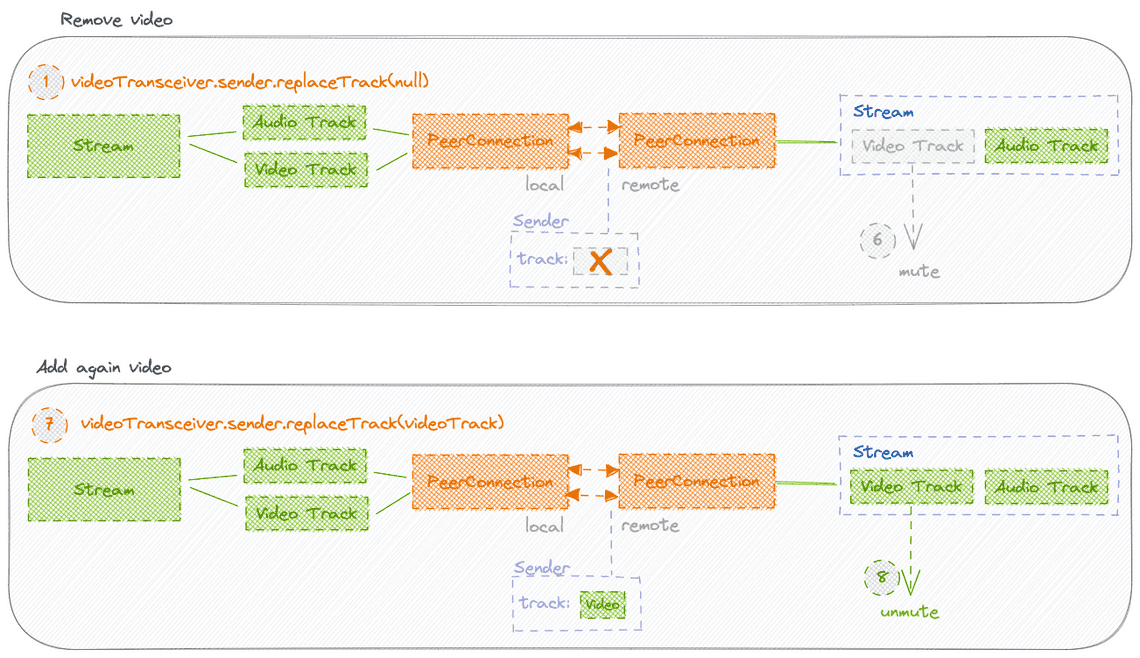

Replacing track at sender level

In this manipulation, I restarted from my audio and video tracks. Then I temporarily discarded the track using the replaceTrack(null) API and finally reactivated it using the same API.

To notice:

80% based on ORTC APIs + 20% based on WebRTC APIs 1.0

No media sent once track has been replaced (null).

Depending on the browser, the remote peer is aware of changes (Chrome) through the media itself (mute and unmute events are triggered at the track level). Whether a negotiation takes place depends on the track added (it is not necessarily required).

Looking at the stats, a new media-source report is created on the local side but with the same trackId. No change on the remote side.

The camera was not turned off during the manipulations (the light stayed on).

The existing transceiver is reused: no new one is added.

The behavior is browser-dependent: there are no mute and unmute events in Firefox/Safari, so there is no way to be aware of this change except by looking at real-time statistics.

As this solution is not homogeneous between browsers, it is preferable to combine it with other solutions, such as changing the direction at the same time: the remote counterpart will then be informed by a new negotiation.
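A minimal sketch of the replaceTrack part of this manipulation. The names detachTrack and attachTrack are hypothetical; replaceTrack itself returns a promise, hence the async functions.

```javascript
// Detach the outgoing track from a sender. Resolves with the detached
// track so that it can be attached again later.
async function detachTrack(sender) {
  const previous = sender.track;
  await sender.replaceTrack(null);
  return previous;
}

// Attach a track to the same sender again (same transceiver, no new m= line).
async function attachTrack(sender, track) {
  await sender.replaceTrack(track);
}
```

Note that the detached track is still live in this sketch, which matches the observation that the camera light stays on.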

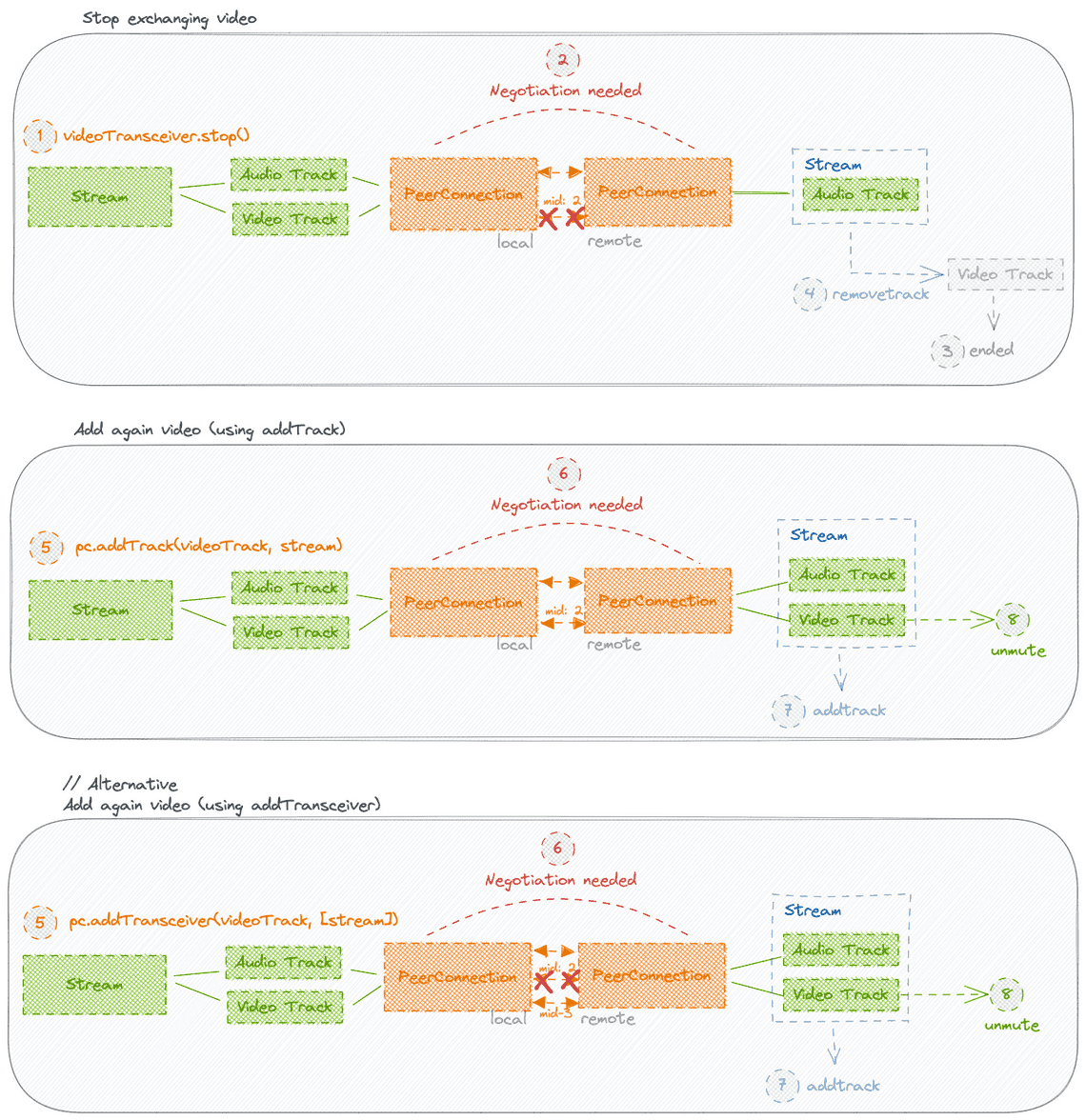

Stopping a transceiver

Still my audio and video tracks. I stopped the video transceiver. Then, I wanted to put the video back.

To notice:

80% based on ORTC APIs + 20% based on WebRTC APIs 1.0

The remote peer is aware of changes: either through the signaling channel (the negotiationneeded event is triggered when using stop() and addTrack) or through the media itself (removetrack and addtrack events are triggered at stream level, ended and unmute events are triggered at track level).

The video track can’t be restarted using the replaceTrack API at the sender level because the transceiver has been stopped.

To overcome that, the addTransceiver API could be used: a new transceiver will be created. Or the addTrack API, which reuses the existing transceiver.

The camera was not turned off during the manipulations (the light stayed on).

The behavior is browser-dependent: in Firefox (106), the track is not removed from the transceiver, which causes an error when using the addTrack API directly. 4 transceivers are present after the scenario… In Safari 16, the addTrack and addTransceiver APIs have the same behavior: they create a new transceiver. In Firefox/Safari, the stopped property goes to true and the mid property goes to null, which is not the case in Chrome.

Here again, the result obtained is not homogeneous. Chrome’s addTrack is surprising because it seems to reuse the existing transceiver even though it has been stopped (mid doesn’t change). It is not clear to me in which cases I have to stop a transceiver.
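A minimal sketch of the addTransceiver variant of this scenario. The function names are hypothetical, and given the browser differences listed above, the behavior should be checked in each target browser.

```javascript
// Stop the transceiver for good: its sender can no longer be reused
// with replaceTrack afterwards.
function stopTransceiver(transceiver) {
  transceiver.stop();
}

// Bring the media back on a brand new transceiver. `stream` is optional
// and only used to associate the track with a stream on the remote side.
function restartOnNewTransceiver(pc, track, stream) {
  return pc.addTransceiver(track, {
    direction: 'sendrecv',
    streams: stream ? [stream] : [],
  });
}
```

Each restart adds one more transceiver in this sketch, so the concern about the growing number of transceivers applies here too.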

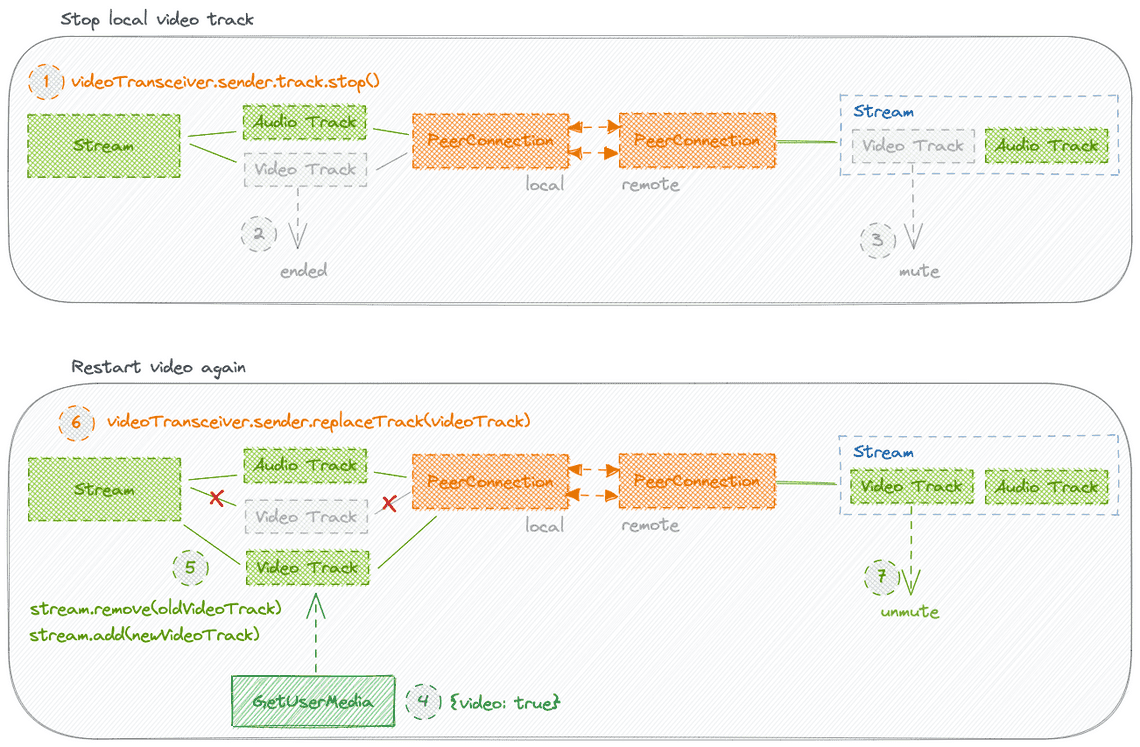

Stopping the local track and restart it

With my audio and video tracks, here, I stopped the video track temporarily to not capture even locally. Then I took a new stream to share it.

To notice:

90% based on WebRTC APIs 1.0 + 10% based on ORTC APIs

The camera was turned off during the manipulations.

The remote peer is aware of changes (Chrome only) through the media itself (mute and unmute events are fired at track level). No negotiation is required.

The behavior is browser-dependent: in Firefox 106 and Safari 16, when stopping the track locally, the remote peer is not aware of the changes: the track doesn’t fire an event and stays unmuted.

Even if the scenario works, this solution is not enough because the remote end cannot react in Firefox and Safari.
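A minimal sketch of this stop-and-recapture scenario. The names stopCapture and resumeCapture are hypothetical, and mediaDevices is passed as a parameter (navigator.mediaDevices in a browser) only to keep the sketch easy to test; the constraints used are an assumption.

```javascript
// Stop capturing: stopping the track releases the camera (light off).
function stopCapture(sender) {
  if (sender.track) {
    sender.track.stop();
  }
}

// Capture again and hand the new track to the same sender.
async function resumeCapture(sender, mediaDevices) {
  const stream = await mediaDevices.getUserMedia({ video: true });
  const [track] = stream.getVideoTracks();
  await sender.replaceTrack(track);
  return track;
}
```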

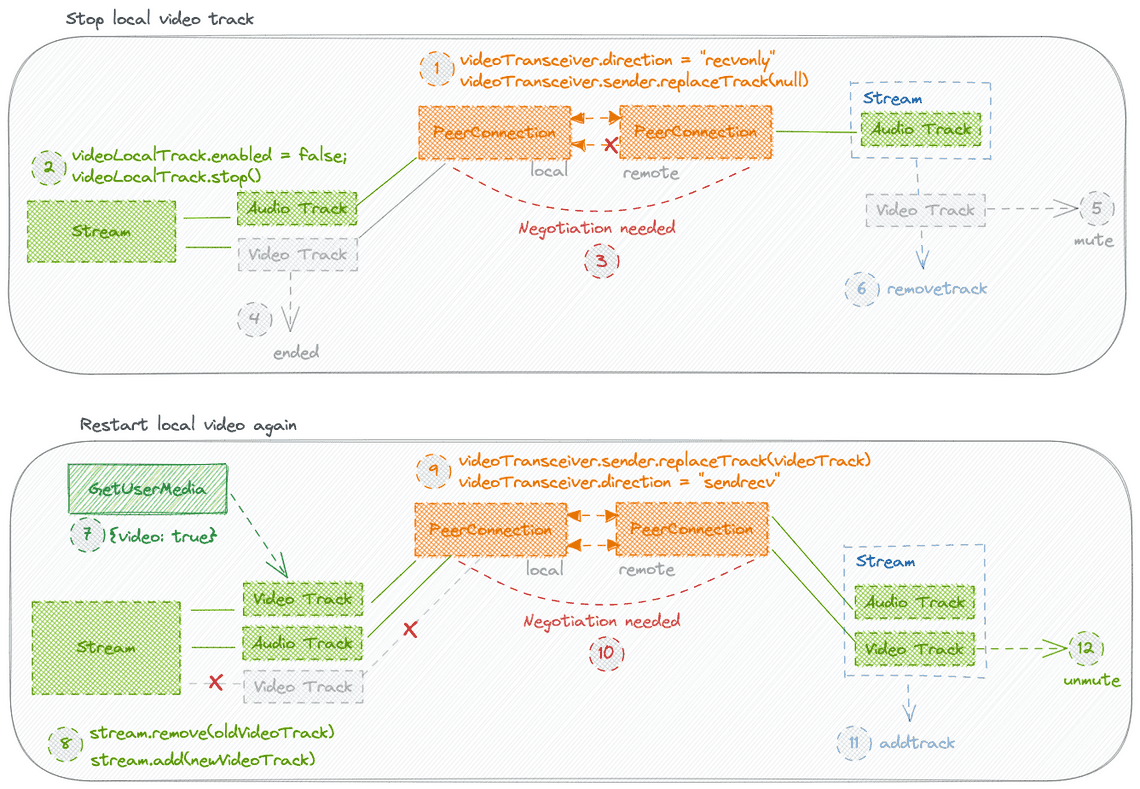

A workable cross-browser solution

To summarize, despite all the APIs tested so far, none of the proposed solutions is 100% suitable: either the solution is not cross-browser, or the camera remains on.

My conclusion (feel free to share if you see it differently) is that I need to combine several APIs or solutions to obtain the desired outcome. Here is the result.

To notice:

80% based on ORTC APIs + 20% based on WebRTC APIs 1.0

The solution is cross-browser: the same behavior is obtained in each browser.

The camera is turned off during the “mute” period. The local stream is then rebuilt with the new video track (the old one is removed).

The video transceiver has been updated to remove the stopped track and the direction has been changed accordingly. Then on “unmute”, it is updated again (same transceiver is reused).

The remote peer is aware of changes: either through the signaling channel (the negotiationneeded event is triggered when changing the transceiver direction) or through the media itself (removetrack and addtrack events are triggered at the stream level, mute and unmute events are triggered at the track level).

Based mainly on the ORTC APIs, this solution fully meets my needs. Here, the WebRTC 1.0 APIs are only used for the negotiation and for access to the local stream (directly or through events).
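The combination described above could be sketched as follows. This is my reading of the steps rather than a reference implementation: the names muteVideo and unmuteVideo, the order of the calls and the getUserMedia constraints are assumptions, and mediaDevices stands for navigator.mediaDevices in a browser.

```javascript
// "Mute": turn the camera off, clean the local stream, empty the sender
// and stop advertising outgoing video on the same transceiver.
async function muteVideo(transceiver, localStream) {
  const track = transceiver.sender.track;
  if (track) {
    track.stop(); // releases the camera
    localStream.removeTrack(track);
  }
  await transceiver.sender.replaceTrack(null);
  transceiver.direction = 'recvonly'; // leads to negotiationneeded
}

// "Unmute": capture a new track, rebuild the local stream and reuse the
// same transceiver to send it.
async function unmuteVideo(transceiver, localStream, mediaDevices) {
  const stream = await mediaDevices.getUserMedia({ video: true });
  const [track] = stream.getVideoTracks();
  localStream.addTrack(track);
  await transceiver.sender.replaceTrack(track);
  transceiver.direction = 'sendrecv'; // leads to negotiationneeded
  return track;
}
```

Because the direction changes on each call, the remote peer learns about the mute through the renegotiation, whatever the browser does with mute and unmute events.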

ORTC: The missing APIs?

ORTC APIs provide more control and avoid munging the SDP. But they are not so easy to use. Here are some problems I faced:

Transceivers do not have any property that identifies the type of track transported (audio or video). For example, when you remove a video track (eg: replaceTrack(null)), how do you remember that this transceiver was for video? I either have to keep a pointer to the sender/transceiver itself or deduce it from the list of existing transceivers. Not cool! Being able to set a custom name on an existing transceiver would be an easy way for the application to find it later…

During my tests, I always had to look at the number of transceivers created. I was afraid of seeing it grow, and I considered that solutions where the number of transceivers increased were not correct, or at least not optimal.

Documentation on ORTC APIs is very minimal: only Mozilla (I didn’t find any from others) took the time to give some explanations on its blog: The evolution of WebRTC and RTCRtpTransceiver explored are two excellent articles that helped me interpret my test results.

I tested the sender setStreams API but didn’t find any case where it was needed. Anyway, this API is not available in Firefox…
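For the first point, one possible way to deduce the kind from the list of transceivers is to look at the receiver side, which keeps a track even when the sender's track is null. This is a sketch with a hypothetical function name, and it assumes receiver.track is always populated, as I understand the specification.

```javascript
// Find the transceivers carrying a given kind ('audio' or 'video') by
// looking at the receiver's track, which stays set after replaceTrack(null).
function findTransceiversByKind(pc, kind) {
  return pc.getTransceivers().filter((t) => t.receiver.track.kind === kind);
}
```

For example, findTransceiversByKind(pc, 'video')[0] would give back the video transceiver after its sender has been emptied, as long as only one video transceiver exists.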

It took me a while to get the hang of the ORTC APIs related to stream manipulation. Combined with the other existing ORTC APIs (not used here), they are the missing part I needed to improve my code base and turn the “tips and tricks” into a more rigorous development mode. But as mentioned, there are some implementation differences between browsers that are not yet resolved…