WebRTC API landscape


WebRTC APIs have evolved a lot since the early days. More and more APIs are available, and they give finer control over the communication. This article focuses on the media-related skills you need to learn in order to develop exciting features…

Simple to start…

Starting with WebRTC is not really complicated… By following a tutorial, watching a video or reading the code of a sample, any developer can understand the basics and put together a nice P2P demo. Moreover, with an SDK from a WebRTC cloud provider, a working audio and video conference is within reach after a few hours of coding.

Ok, but end-users want more…

They want to share their screens, to add and remove their camera, to mute and unmute their microphone… Some want to blur their background, some want an immersive experience with spatial sound, and others want a funny hat on their head or the same voice as Darth Vader…

What they all have in common is that they want to hear the other participants clearly and they want to be able to ‘trust’ the application before sharing their media.

Putting these features in place and developing such an application is not easy and takes much longer: there is the time to understand how these things can be done, and then the time to develop and qualify the application.

The two initial hours spent building a demo seem far away now. The big climb can start, because the WebRTC learning curve is getting longer and steeper…

…and then more APIs to learn

Some years ago, it was easy to list and learn the JavaScript WebRTC interfaces and methods available from the browser: getUserMedia, RTCPeerConnection, MediaStream, and MediaStreamTrack. Add some HTML skills for using the <video> and <audio> elements, and any developer was ready to dive into WebRTC and set up a peer-to-peer call.

Note: I am leaving aside the learning curve needed to understand JSEP and the associated server part, as well as the deployment of infrastructure components such as STUN and TURN servers.

During the same period, some developers started to use the HTML <canvas> element to take snapshots from the camera’s stream, to save them to files, or to apply filters to the stream before sending or displaying the video. The Canvas API is part of the HTML5 specification, and complete support was available in Safari 4, Firefox 3.6 and Chrome 4. In Internet Explorer it only arrived with IE 9, but as IE didn’t support WebRTC…
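As an illustration of the kind of filter described above, here is a minimal sketch that turns one frame into grayscale. It assumes the frame has already been drawn to a canvas and read back as RGBA pixel data; the helper name `toGrayscale` and the choice of luma weights are illustrative assumptions, not taken from a particular sample.

```javascript
// Hypothetical helper: converts RGBA pixel data (as found in
// CanvasRenderingContext2D.getImageData().data) to grayscale, in place.
function toGrayscale(pixels) {
  for (let i = 0; i < pixels.length; i += 4) {
    // Rec. 601 luma weights; the alpha channel (i + 3) is left untouched.
    const luma = 0.299 * pixels[i] + 0.587 * pixels[i + 1] + 0.114 * pixels[i + 2];
    pixels[i] = luma;
    pixels[i + 1] = luma;
    pixels[i + 2] = luma;
  }
  return pixels;
}

// Browser-only usage (assumes a playing <video> element and a <canvas>):
//   const ctx = canvas.getContext('2d');
//   ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
//   const image = ctx.getImageData(0, 0, canvas.width, canvas.height);
//   toGrayscale(image.data);
//   ctx.putImageData(image, 0, 0);
```

Repeating the browser-only part on every animation frame would give a filtered preview of the camera.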

This was the first sign that a few WebRTC APIs alone would not be enough…

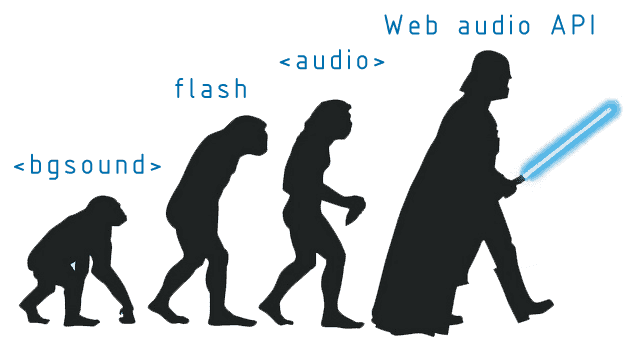

Then, using the Web Audio API, developers wanted to display a graphic equalizer or to change the audio signal itself. The Web Audio API offers many new interfaces for manipulating the audio stream. Understanding its core concepts takes time, and it is not as easy as playing with the HTML Canvas element, mainly because working on audio is more complicated than working on graphics: in addition to your ears, you need devices and equipment that can compare or analyze the output signal. And in case of a mistake, be ready to cut the input signal :-)
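For the graphic equalizer case, a minimal sketch could look like the following. It assumes the frequency data comes from an AnalyserNode through getByteFrequencyData(), which fills a Uint8Array with values from 0 to 255; the helper name `toBars` and the averaging strategy are assumptions made for illustration.

```javascript
// Hypothetical helper: groups frequency bins into `barCount` bars, each
// holding the average level of its bins, normalised to the range 0..1.
// Assumes bins.length >= barCount; leftover trailing bins are ignored.
function toBars(bins, barCount) {
  const size = Math.floor(bins.length / barCount);
  const bars = [];
  for (let b = 0; b < barCount; b++) {
    let sum = 0;
    for (let i = b * size; i < (b + 1) * size; i++) sum += bins[i];
    bars.push(sum / size / 255);
  }
  return bars;
}

// Browser-only usage (assumes `stream` comes from getUserMedia):
//   const ctx = new AudioContext();
//   const analyser = ctx.createAnalyser();
//   ctx.createMediaStreamSource(stream).connect(analyser);
//   const bins = new Uint8Array(analyser.frequencyBinCount);
//   analyser.getByteFrequencyData(bins); // call once per animation frame
//   const bars = toBars(bins, 16);       // then draw the bars on a canvas
```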

A brief introduction to the Web Audio API

For a Web developer, understanding the AudioContext and how to route the signal is crucial:

- Getting the audio stream from a source node like a microphone: OK, already known

- Connecting this source node to an audio node that performs an operation on the signal: New task

- Connecting the output of that audio node to a destination node that plays the result: OK, already known
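The three steps above can be sketched as follows. This is only a minimal example under stated assumptions: the helper name `routeWithGain` is hypothetical, and a GainNode is used as the processing node simply because it is the easiest one to hear.

```javascript
// Hypothetical helper: microphone -> gain -> speakers.
// `ctx` is expected to be an AudioContext and `stream` a MediaStream.
function routeWithGain(ctx, stream, gainValue) {
  const source = ctx.createMediaStreamSource(stream); // source node
  const gain = ctx.createGain();                      // processing node
  gain.gain.value = gainValue;
  source.connect(gain);
  gain.connect(ctx.destination);                      // destination node
  return { source, gain };
}

// Browser-only usage:
//   const stream = await navigator.mediaDevices.getUserMedia({ audio: true });
//   routeWithGain(new AudioContext(), stream, 0.5); // half the volume
```

Be careful with feedback when trying this with speakers: headphones are safer.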

Additionally, the Web Audio API makes it possible to use other kinds of source nodes that do not depend on WebRTC: audio data loaded from a file by using the AudioBufferSourceNode interface, a single unchanging value by using the ConstantSourceNode interface, or an HTML element such as <audio> or <video> by using the MediaElementAudioSourceNode interface.

The complexity of the Web Audio API appears when connecting the audio nodes responsible for changing the audio signal, because there are a lot of possibilities: adding gain to the signal using the GainNode interface, delaying the audio using the DelayNode, or more complex tasks such as panning the audio stream left or right using the StereoPannerNode or splitting the audio stream into several mono channels. You need an audio diagram that represents the audio flow and all the processing done.
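One way to keep such a diagram readable in code is to describe the flow as an ordered list of nodes. The sketch below is an assumption about how this could be organised, with a hypothetical helper named `connectChain`; the delay and pan values in the usage part are arbitrary.

```javascript
// Hypothetical helper: connects each node to the next one, in order,
// and returns the last node of the chain.
function connectChain(nodes) {
  return nodes.reduce((previous, next) => {
    previous.connect(next);
    return next;
  });
}

// Browser-only usage: delay the signal by 0.25 s and pan it fully left.
//   const ctx = new AudioContext();
//   const source = ctx.createMediaStreamSource(stream);
//   const delay = ctx.createDelay();
//   delay.delayTime.value = 0.25;
//   const panner = ctx.createStereoPanner();
//   panner.pan.value = -1; // -1 = left, 1 = right
//   connectChain([source, delay, panner, ctx.destination]);
```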

As if that were not enough, it is now possible to deal with video in the same way as with the Web Audio API, but by handling the complexity ourselves: using Insertable Streams, the video signal can be intercepted before being sent. Each part of the video (i.e. each frame) is accessible and can be modified.

In a few words, it works like this:

- Using the MediaStreamTrackProcessor interface, the video stream can be collected from a camera (i.e. from the video track exposed)

- Then, using a TransformStream that defines the video treatment, the original video can be modified

- Finally, the new video generated is ‘piped’ to a MediaStreamTrackGenerator that is able to play the result in a player such as the HTML <video> element.
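The steps above can be sketched as follows. The interfaces used in the usage part are the experimental ones exposed by Chromium-based browsers, so treat this as an illustration rather than stable code; the factory name `makeFrameTransformer` is hypothetical, and the per-frame function is assumed to be synchronous to keep the example short.

```javascript
// Hypothetical factory: wraps a per-frame function into the transformer
// object expected by the TransformStream constructor.
function makeFrameTransformer(processFrame) {
  return {
    transform(frame, controller) {
      const output = processFrame(frame);
      // If a new frame was produced, release the original one.
      if (output !== frame && typeof frame.close === 'function') frame.close();
      controller.enqueue(output);
    },
  };
}

// Browser-only usage (assumes `stream` comes from getUserMedia):
//   const [track] = stream.getVideoTracks();
//   const processor = new MediaStreamTrackProcessor({ track });
//   const generator = new MediaStreamTrackGenerator({ kind: 'video' });
//   const transformer = makeFrameTransformer((frame) => frame); // pass-through
//   processor.readable
//     .pipeThrough(new TransformStream(transformer))
//     .pipeTo(generator.writable);
//   videoElement.srcObject = new MediaStream([generator]);
```

Replacing the pass-through function with real processing (for example drawing the frame to an OffscreenCanvas and building a new VideoFrame from it) is where the actual work starts.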

Insertable streams come with several complexities:

The first is that it is up to the developer to build the processing. The Web Audio API provides ready-made audio nodes, but there is no equivalent for video, such as a backgroundRemovalNode. Here, the developer has to code the video processing.

The second is that this processing is too costly to run on the main thread, and so needs to be done in a Worker thread.
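A minimal sketch of moving the processing off the main thread is shown below. It assumes the browser allows streams to be transferred with postMessage(), and that `processor` and `generator` are a MediaStreamTrackProcessor and a MediaStreamTrackGenerator created on the main thread; the helper name `runInWorker` and the file name `transform-worker.js` are hypothetical.

```javascript
// Hypothetical helper: hands both ends of the pipeline to a worker.
// The streams are listed as transferables, so they are moved to the
// worker instead of being copied.
function runInWorker(worker, readable, writable) {
  worker.postMessage({ readable, writable }, [readable, writable]);
}

// Inside the worker (hypothetical file "transform-worker.js"):
//   self.onmessage = ({ data: { readable, writable } }) => {
//     const transform = new TransformStream({
//       transform(frame, controller) { controller.enqueue(frame); },
//     });
//     readable.pipeThrough(transform).pipeTo(writable);
//   };
//
// Browser-only usage on the main thread:
//   runInWorker(new Worker('transform-worker.js'),
//               processor.readable, generator.writable);
```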

The third is that the API offered by browsers today will change in the near future, as noted by the W3C in the working draft: “The API presented in this section represents a preliminary proposal based on protocol proposals that have not yet been adopted by the IETF WG. As a result, both the API and underlying protocol are likely to change significantly going forward.”

Note: A first draft of WebRTC Encoded Transform has just been published by the W3C. This specification addresses not only video transformation but all transformations that can be done on a MediaStreamTrack.

Audio and Video processing skills

This article shows that a WebRTC developer now needs to know how audio and video signals are processed and how to change them. Used in the right way, these APIs open the door to a multitude of new scenarios and use cases, and so to differentiators. Having only some basic skills in SIP or codecs is no longer enough!

Playing with the Web Audio API first is a good start because it is like playing with Lego: you arrange the different audio boxes depending on the effect you want. Moving to Insertable Streams is then needed to get more control over the stream. The intermediate step, if not already done, is to discover Web Workers in order to understand where and how to move heavy processing to a different thread.

API landscape

In order to get a global picture of all these APIs, I tried to extract them from Chrome and to link them together so as to know which element gives access to which other.

As these APIs change release after release, I developed a little tool that inspects each interface and automatically extracts its prototype (here, the list of properties, methods and events accessible from a JavaScript application).
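The extraction step could look like the sketch below. This is not the actual tool, only an assumption about how such an inspection can be done with standard JavaScript reflection; the helper name `describeInterface` and the rule that treats every member starting with "on" as an event handler are illustrative. Only members declared on the interface itself are listed, not inherited ones.

```javascript
// Hypothetical helper: lists the members declared on a constructor's
// prototype, split into properties, methods and event handlers.
function describeInterface(ctor) {
  const result = { name: ctor.name, properties: [], methods: [], events: [] };
  const descriptors = Object.getOwnPropertyDescriptors(ctor.prototype);
  for (const [key, desc] of Object.entries(descriptors)) {
    if (key === 'constructor') continue;
    if (key.startsWith('on')) result.events.push(key); // e.g. "onended"
    else if (typeof desc.value === 'function') result.methods.push(key);
    else result.properties.push(key);
  }
  return result;
}

// Browser-only usage:
//   describeInterface(MediaStreamTrack);
//   describeInterface(RTCPeerConnection);
```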

Then, I use Mermaid to display the graph.
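To give an idea of what is handed to Mermaid, here is a minimal sketch that generates flowchart text from a list of links between interfaces. The helper name `toMermaid` and the shape of the input (triples of source, label and target) are assumptions made for this example.

```javascript
// Hypothetical helper: turns [from, label, to] triples into Mermaid
// flowchart text, one labelled arrow per triple.
function toMermaid(edges) {
  const lines = edges.map(([from, label, to]) => `  ${from} -->|${label}| ${to}`);
  return ['graph LR', ...lines].join('\n');
}

// Usage:
//   toMermaid([
//     ['MediaStream', 'getTracks', 'MediaStreamTrack'],
//     ['RTCPeerConnection', 'getSenders', 'RTCRtpSender'],
//   ]);
```

The resulting text can then be given to the Mermaid library to render the graph.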

And finally, I added the possibility to save the graph as an SVG file.

What is cool is that this tool can collect the same APIs from any browser, which makes it possible to compare their WebRTC API surfaces.

Here is the picture extracted from Chrome M96 with the experimental features flag set to enabled.

The file can be downloaded here: WebRTC complete APIs for Chrome M96

Personally, this picture helps me navigate the APIs and quickly identify how to access a specific property or which method I should use. Additionally, it shows me the gap between Chrome, Firefox and Safari in terms of APIs.

Conclusion?

My own conclusion is that understanding WebRTC and developing what it makes possible requires more and more skills and time. And so becoming a WebRTC specialist is like becoming a Ninja:

WebRTC developers = Human + WebRTC core skills + Signaling skills + Security skills + Audio/Video signal processing skills + Advanced JavaScript skills (Workers or WebAssembly) + IT/Network skills + …

My last thought on this: if I can remove the noise around me and blur my background to hide the sandy beach, how can I be sure that I am speaking to the right person and not to an avatar or, worse, to someone who has stolen an identity, like in the movie Face/Off but with virtual surgery :-)